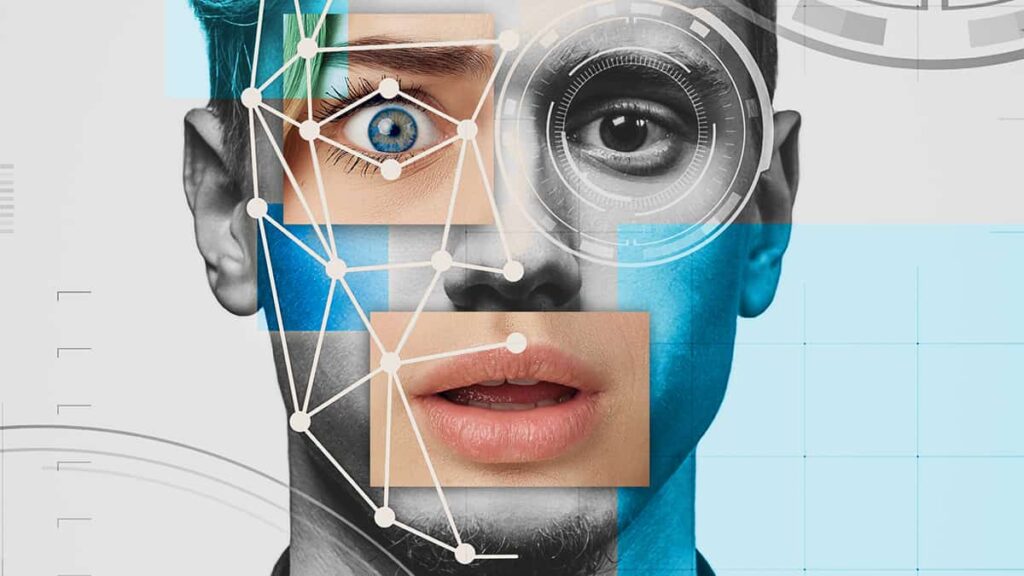

In recent years, deepfake technology has emerged as a groundbreaking application of artificial intelligence (AI) that provokes both fascination and concern. Deepfakes are synthetic media, usually manipulated audio and video content, created using advanced machine learning algorithms. In this article, we look at how deepfake technology generates synthetic media and examine the ethical and societal implications of this rapidly evolving field.

Understanding Deepfake Technology

Deepfake technology uses deep learning algorithms, particularly generative adversarial networks (GANs), to create realistic but fabricated audio and video content. A GAN consists of two neural networks: a generator network that produces the synthetic media and a discriminator network that judges whether a given sample is real or generated. Through an iterative training process, the generator learns to produce increasingly convincing deepfakes while the discriminator learns to distinguish real content from fake content.
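The adversarial training loop described above can be illustrated with a deliberately tiny example. The following is a minimal sketch, not a real deepfake model: it assumes a hypothetical one-dimensional "generator" G(z) = z + b with a single learnable shift `b`, and a "discriminator" that is just logistic regression, D(x) = sigmoid(w*x + c). Each `train_step` first updates the discriminator to tell real from fake, then updates the generator to fool the updated discriminator, using the standard binary cross-entropy losses.

```python
import math


def sigmoid(t: float) -> float:
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: the discriminator wants D(real) -> 1 and D(fake) -> 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return -math.log(d_fake)


def train_step(w: float, c: float, b: float, x_real: float, z: float, lr: float = 0.1):
    """One alternating GAN update on a hypothetical toy 1-D problem.

    generator      G(z) = z + b             (learnable shift b)
    discriminator  D(x) = sigmoid(w*x + c)  (logistic regression)
    """
    # 1) Discriminator step: gradient descent on discriminator_loss w.r.t. w and c.
    x_fake = z + b
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
    grad_c = -(1.0 - d_real) + d_fake
    w -= lr * grad_w
    c -= lr * grad_c

    # 2) Generator step: gradient descent on generator_loss w.r.t. b,
    #    scored by the discriminator that was just updated.
    d_fake = sigmoid(w * (z + b) + c)
    grad_b = -(1.0 - d_fake) * w
    b -= lr * grad_b
    return w, c, b


# One step from all-zero parameters, with a real sample at 4.0 and noise z = 0.0:
# the discriminator learns w ≈ 0.2 (real samples score higher), and the
# generator's shift moves to b ≈ 0.01, i.e. toward the real data.
w, c, b = train_step(0.0, 0.0, 0.0, x_real=4.0, z=0.0)
```

A real training run would draw fresh real samples and noise at every step and repeat for many iterations. Because this discriminator is a single logistic unit, the toy can only push the generator's output location around; deepfake systems use deep convolutional networks for both players.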

Generating a deepfake involves several steps. First, a dataset of thousands of images or videos is fed into the AI model for training. The model learns patterns, facial features, and voice characteristics from the dataset. Once trained, the model can generate synthetic media by swapping faces, altering expressions, or even manipulating speech.
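The generation half of these steps (applying an already trained model to a video) can be sketched as a per-frame loop. This is a minimal, hypothetical sketch that assumes one widely described face-swap design: a shared encoder that captures what a face is doing (pose, expression) and a decoder trained to render the target person's face. Training is not shown, and `FaceSwapModel`, `detect`, and the toy list-of-lists frames are illustrative stand-ins, not any real tool's API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Frame = List[List[float]]        # toy grayscale frame: rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, height, width) of a detected face


@dataclass
class FaceSwapModel:
    """Hypothetical trained model: shared encoder plus a target-identity decoder."""
    encoder: Callable[[Frame], Sequence[float]]          # face crop -> latent code
    decoder_target: Callable[[Sequence[float]], Frame]   # latent code -> target face


def crop(frame: Frame, box: Box) -> Frame:
    top, left, h, w = box
    return [row[left:left + w] for row in frame[top:top + h]]


def paste(frame: Frame, patch: Frame, box: Box) -> Frame:
    top, left, _, _ = box
    out = [row[:] for row in frame]  # copy so the input frame is left untouched
    for i, patch_row in enumerate(patch):
        out[top + i][left:left + len(patch_row)] = patch_row
    return out  # real systems also blend colour and edges; omitted here


def swap_faces(frames: List[Frame],
               detect: Callable[[Frame], Optional[Box]],
               model: FaceSwapModel) -> List[Frame]:
    """Replace the detected face in every frame with the target identity's face."""
    result = []
    for frame in frames:
        box = detect(frame)
        if box is None:  # no face found: keep the frame as it is
            result.append(frame)
            continue
        latent = model.encoder(crop(frame, box))  # what the source face is doing
        new_face = model.decoder_target(latent)   # same pose/expression, new identity
        result.append(paste(frame, new_face, box))
    return result


# Usage with stub components standing in for trained networks:
frame = [[0.0] * 4 for _ in range(4)]
model = FaceSwapModel(encoder=lambda face: [sum(map(sum, face))],
                      decoder_target=lambda code: [[9.0, 9.0], [9.0, 9.0]])
out = swap_faces([frame], detect=lambda f: (1, 1, 2, 2), model=model)
```

Keeping the encoder shared is what lets a code extracted from one person's face be rendered as another person's face.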

Ethical Concerns and Misuse

Deepfake technology has raised significant ethical concerns. It can be misused for malicious purposes, such as spreading disinformation, defaming individuals, or impersonating public figures. Deepfake videos can convincingly depict someone saying or doing things they never did, leading to reputational damage, invasion of privacy, and an erosion of trust in media and information.

The rise of deepfakes poses serious challenges for journalism and media integrity. Because deepfakes make it possible to create convincing fake news videos, they can undermine the credibility of legitimate news sources. Journalists and media consumers must be vigilant in verifying the authenticity of content and should rely on trusted sources to combat the spread of misinformation.

Deepfakes also raise serious concerns about privacy and consent. Manipulating someone’s likeness without their consent infringes on their privacy rights and can have severe psychological, social, and legal consequences. Protecting individuals from deepfake abuse requires both robust legislation and technological countermeasures.

Addressing Challenges & Benefits

Addressing the challenges posed by deepfake technology requires a multi-faceted approach. Technological solutions, such as advanced detection algorithms, watermarking techniques, and media authentication mechanisms, are being developed to identify and mitigate deepfake content. In addition, raising awareness and building media literacy and critical thinking skills among the general public can help reduce the impact of deepfake manipulation.
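Media authentication takes a different angle from detection: instead of asking whether content looks fake, it checks whether content has changed since a trusted source published it. Here is a minimal sketch of that idea, assuming a secret key shared between publisher and verifier; real provenance schemes typically use public-key signatures and embedded metadata instead, and `sign_media` and `verify_media` are hypothetical names.

```python
import hashlib
import hmac


def sign_media(media: bytes, key: bytes) -> str:
    """Publisher side: compute an HMAC-SHA256 tag over the SHA-256 digest of the media."""
    digest = hashlib.sha256(media).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(media: bytes, key: bytes, tag: str) -> bool:
    """Consumer side: recompute the tag and compare it in constant time."""
    expected = sign_media(media, key)
    return hmac.compare_digest(expected, tag)


# Usage: any change to the bytes (or a different key) makes verification fail.
key = b"hypothetical-shared-secret"
original = b"frame data from the published video"
tag = sign_media(original, key)
ok = verify_media(original, key, tag)                    # True
tampered_ok = verify_media(original + b"!", key, tag)    # False
```

Note that a valid tag only shows the content is unchanged since signing; it says nothing about whether the signed original was itself genuine.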

Despite these ethical concerns, deepfake technology also offers potentially beneficial applications. It can be used in the entertainment, filmmaking, and gaming industries to create realistic special effects and digital avatars. Deepfakes could transform virtual reality experiences, improve dubbing and localization, and open up new creative possibilities.

As the field continues to evolve, developing regulatory frameworks becomes essential. Governments and policymakers need to collaborate with technology experts to establish legal guidelines that protect individuals’ rights, preserve media integrity, and foster responsible use of synthetic media technology.

The Takeaway

Deepfake technology is a double-edged sword, offering exciting possibilities while raising serious ethical and societal concerns. As we navigate this evolving landscape, it’s essential to strike a balance between innovation and safeguards against misuse. By addressing the ethical implications, promoting media literacy, and fostering collaboration among technology developers, policymakers, and society at large, we can harness the potential of deepfake technology while protecting the integrity of our digital world.