Three years ago Zoom settled with the FTC over a claim of deceptive marketing around security claims, having been accused of overstating the strength of the encryption it offered. Now the videoconferencing platform could be headed for a similar tangle in Europe in relation to its privacy small print.

The recent terms & conditions controversy sequence goes like this: A clause added to Zoom’s legalese back in March 2023 grabbed attention on Monday after a post on Hacker News claimed it allowed the company to use customer data to train AI models “with no opt out”. Cue outrage on social media.

Although, on closer inspection, some pundits suggested the no opt out applied only to “service generated data” (telemetry data, product usage data, diagnostics data etc), i.e. rather than everything Zoom’s customers are doing and saying on the platform.

Still, people were mad. Meetings are, after all, painful enough already without the prospect of some of your “inputs” being repurposed to feed AI models that might even — in our fast-accelerating AI-generated future — end up making your job redundant.

The relevant clauses from Zoom’s T&Cs are 10.2 through 10.4 (screengrabbed below). Note the bolded last line emphasizing the consent claim related to processing “audio, video or chat customer content” for AI model training — which comes after a wall of text where users entering into the contractual agreement with Zoom commit to grant it expansive rights for all other types of usage data (and other, non-AI training purposes too):

Screengrab: Natasha Lomas/TechCrunch

Setting aside the obvious reputational risks sparked by righteous customer anger, certain privacy-related legal requirements apply to Zoom in the European Union where regional data protection laws are in force. So there are legal risks at play for Zoom, too.

The relevant laws here are the General Data Protection Regulation (GDPR), which applies when personal data is processed and gives people a suite of rights over what’s done with their information; and the ePrivacy Directive, an older piece of pan-EU legislation which deals with privacy in electronic comms.

Previously ePrivacy was focused on traditional telecoms services but the law was modified at the end of 2020, via the European Electronic Communications Code, to extend confidentiality duties to so-called over-the-top services such as Zoom. So Article 5 of the Directive — which prohibits “listening, tapping, storage or other kinds of interception or surveillance of communications and the related traffic data by persons other than users, without the consent of the users concerned” — looks highly relevant here.

Consent claim

Rewinding a little, Zoom responded to the ballooning controversy over its T&Cs by pushing out an update — including the bolded consent note in the screengrab above — which it also claimed, in an accompanying blog post, “confirm[s] that we will not use audio, video, or chat customer content to train our artificial intelligence models without your consent”.

Its blog post is written in the usual meandering corpspeak — peppered with claims of commitment to transparency but without Zoom clearly addressing customer concerns about its data use. Instead its crisis PR response wafts in enough self-serving side-chatter and product jargon to haze the view. The upshot is a post obtuse enough to leave a general reader still scratching their head over what’s actually going on. Which is called ‘shooting yourself in the foot’ when you’re facing a backlash triggered by apparently contradictory statements in your communications. It can also imply a company has something to hide.

Zoom wasn’t any clearer when TechCrunch contacted it with questions about its data-for-AI processing in an EU law context; failing to provide us with straight answers to queries about the legal basis it’s relying on for processing to train AI models on regional users’ data; or even, initially, to confirm whether access to the generative AI features it offers, such as an automated meeting summary tool, is dependent on the user consenting to their data being used as AI training fodder.

At this point its spokesperson just reiterated its line that: “Per the updated blog and clarified in the ToS — We’ve further updated the terms of service (in section 10.4) to clarify/confirm that we will not use audio, video, or chat Customer Content to train our artificial intelligence models without customer consent.” [emphasis its]

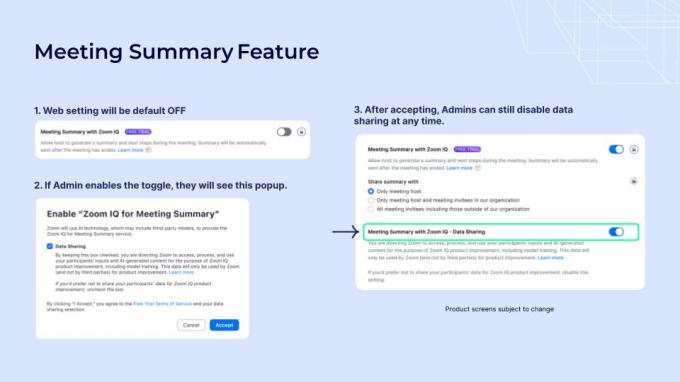

Zoom’s blog post, which is attributed to chief product officer Smita Hashim, goes on to discuss some examples of how it apparently gathers “consent”: Depicting a series of menus it may show to account owners or administrators; and a pop-up it says is displayed to meeting participants when the aforementioned (AI-powered) Meeting Summary feature is enabled by an admin.

In the case of the first group (admins/account holders) Hashim’s post literally states that they “provide consent”. This wording, coupled with what’s written in the next section — vis-a-vis meeting participants receiving “notice” of what the admins have enabled/agreed to — implies Zoom is treating the process of obtaining consent as something that can be delegated to an admin on behalf of a group of people. Hence the rest of the group (i.e. meeting participants) just getting “notice” of the admin’s decision to turn on AI-powered meeting summaries and give it the green light to train AIs on their inputs.

However the law on consent in the EU — if, indeed, that’s the legal basis Zoom is relying upon for this processing — doesn’t work like that. The GDPR requires a per individual ask if you’re claiming consent as your legal basis to process personal data.

As noted above, ePrivacy also explicitly requires that electronic comms be kept confidential unless the user consents to interception (or unless there’s some national security reason for the surveillance but Zoom training generative AI features doesn’t seem likely to qualify for that).

Back to Zoom’s blog post: It refers to the pop-up shown to meeting participants as “notice” or “notification” that its generative AI services are in use, with the company offering a brief explainer that: “We inform you and your meeting participants when Zoom’s generative AI services are in use. Here’s an example [below graphic] of how we provide in-meeting notification.”

Image credits: Zoom

Yet in its response to the data-for-AI controversy Zoom has repeatedly claimed it does not process customer content to train its AIs without their consent. So is this pop-up just a “notification” that its AI-powered feature has been enabled or a bona fide ask where Zoom claims it obtains consent from customers to this data-sharing? Frankly its description is not at all clear.

For the record, the text displayed on the notice pop-up reads* — and do note the use of the past tense in the title (which suggests data sharing is already happening):

Meeting Summary has been enabled.

The account owner may allow Zoom to access and use your inputs and AI-generated content for the purpose of providing the feature and for Zoom IQ product improvement, including model training. The data will only be used by Zoom and not by third parties for product improvement. Learn more

We’ll send the meeting summary to invitees after the meeting ends (based on the settings configured for the meeting). Anyone who receives the meeting summary may save and share it with apps and others.

AI-generated content may be inaccurate or misleading. Always check for accuracy.

Two options are presented to meeting participants who see this notice. One is a button labelled “Got it!” (which is highlighted in bright blue so apparently pre-selected); the other is a button labelled “Leave meeting” (displayed in gray, so not the default selection). There’s also a link in the embedded text where users can click to “learn more” (but, presumably, won’t be presented with additional options vis-a-vis its processing of their inputs).

Free choice vs free to leave…

Fans of European Union data protection law will be familiar with the requirement that for consent to be a valid legal basis for processing people’s data it must meet a certain standard — namely: It must be clearly informed; freely given; and purpose limited (specific, not bundled). Nor can it be nudged with self-serving pre-selections.

These folks might also point out that Zoom’s notice to meeting participants about its AI generated feature being activated does not provide them with a free choice to deny consent for their data to become AI training fodder. (Indeed, judging by the tense used, it’s already processing their info for that by the time they see this notice.)

This much is obvious since the meeting participant must either agree to their data being used by Zoom for uses including AI training or quit the meeting altogether. There are no other choices available. And it goes without saying that telling your users the equivalent of ‘hey, you’re free to leave’ does not sum to a free choice over what you’re doing with their data. (See, for e.g.: The CJEU’s recent ruling against Meta/Facebook’s forced consent.)

Zoom is not even offering its users the ability to pay it to avoid this non-essential data-mining — which is a route some regional news publishers have taken by offering consent-to-tracking paywalls (where the choice offered to readers is either to pay for access to the journalism or agree to tracking to get free access). Although even that approach looks questionable, from a GDPR fairness point of view (and remains under legal challenge).

But the key point here is that if consent is the legal basis claimed to process personal data in the EU there must actually be a free choice available.

And a choice to be in the meeting or not in the meeting is not that. (Add to that, as a mere meeting participant — i.e. not an admin/account holder — such people are unlikely to be the most senior person in the virtual room — and withdrawing from a meeting you didn’t initiate/arrange on data ethics grounds may not feel available to that many employees. There’s likely a power imbalance between the meeting admin/organizer and the participants, just as there is between Zoom the platform providing a communications service and Zoom’s users needing to use its platform to communicate.)

As if that wasn’t enough, Zoom is very obviously bundling its processing of data for providing the generative AI feature with other non-essential purposes — such as product improvement and model training. That looks like a straight-up contravention of the GDPR purpose limitation principle, which would also apply in order for consent to be valid.

But all of these analyses are only relevant if Zoom is actually relying on consent as its legal basis for the processing, as its PR response to the controversy seems to claim — or, at least, it does in relation to processing customer content for training AI models.

Of course we asked Zoom to confirm its legal basis for the AI training processing in the EU but the company avoided giving us a straight answer. Funny that!

Pressed to justify its claim to be obtaining consent for such processing against EU law consent standards, a spokesman for the company sent us the following (irrelevant and/or misleading) bullet-points [again, emphasis its]:

- Zoom generative AI features are default off and individually enabled by customers. Here’s the press release from June 5 with more details

- Customers control whether to enable these AI features for their accounts and can opt out of providing their content to Zoom for model training at the time of enablement

- Customers can change the account’s data sharing selection at any time

- Additionally, for Zoom IQ Meeting Summary, meeting participants are given notice via a pop up when Meeting Summary is turned on. They can then choose to leave the meeting at any time. The meeting host can start or stop a summary at any time. More details are available here

So Zoom’s defence of the consent it claims to offer is literally that it gives users the choice to not use its service. (It should really ask how well that kind of argument went for Meta in front of Europe’s top court.)

Even the admin/account-holder consent flow Zoom does serve up is problematic. Its blog post doesn’t even explicitly describe this as a consent flow — it just couches it as an example of “our UI through which a customer admin opts in to one of our new generative AI features”, linguistically bundling opting into its generative AI with consent to share data with it for AI training etc.

In the screengrab Zoom includes in the blog post (which we’ve embedded below) the generative AI Meeting Summary feature is stated in annotated text as being off by default — apparently requiring the admin/account holder to actively enable it. There is also, seemingly, an explicit choice associated with the data sharing that is presented to the admin. (Note the tiny blue check box in the second menu.)

However — if consent is the claimed legal basis — another problem is that this data-sharing box is pre-checked by default, thereby requiring the admin to take the active step of unchecking it in order for data not to be shared. So, in other words, Zoom could be accused of deploying a dark pattern to try to force consent from admins.

Under EU law, there is also an onus to clearly inform users of the purpose you’re asking them to consent to.

But, in this case, if the meeting admin doesn’t carefully read Zoom’s small print — where it specifies the data sharing feature can be unchecked if they don’t want these inputs to be used by it for purposes such as training AI models — they might ‘agree’ by accident (i.e. by failing to uncheck the box). Especially as a busy admin might just assume they need to have this “data sharing” box checked to be able to share the meeting summary with other participants, as they will probably want to.

So even the quality of the ‘choice’ Zoom is presenting to meeting admins looks problematic against EU standards for consent-based processing to fly.

Add to that, Zoom’s illustration of the UI admins get to see includes a further small print qualification — where the company warns in fantastically tiny writing that “product screens subject to change”. So, er, who knows what other language and/or design it may have deployed to ensure it’s getting mostly affirmative responses to data-sharing user inputs for AI training to maximize its data harvesting.

Image credits: Zoom

But hold your horses! Zoom isn’t actually relying on consent as its legal basis to data-mine users for AI, according to Simon McGarr, a solicitor with Dublin-based law firm McGarr Solicitors. He suggests all the consent theatre described above is essentially a “red herring” in EU law terms — because Zoom is relying on a different legal basis for the AI data mining: Performance of a contract.

“Consent is irrelevant and a red herring as it is relying on contract as the legal basis for processing,” he told TechCrunch when we asked for his views on the legal basis question and Zoom’s approach more generally.

US legalese meets EU law

In McGarr’s analysis, Zoom is applying a US drafting to its legalese — which does not take account of Europe’s (distinct) framework for data protection.

“Zoom is approaching this in terms of ownership of personal data,” he argues. “There’s non personal data and personal data but they’re not distinguishing between those two. Instead they’re distinguishing between content data (“customer content data”) and what they call telemetry data. That is metadata. Therefore they’re approaching this with a framework that isn’t compatible with EU law. And this is what has led them to make assertions in respect of ownership of data — you can’t own personal data. You can only be either the controller or the processor. Because the person continues to have rights as the data subject.

“The claim that they can do what they like with metadata runs contrary to Article 4 of the GDPR which defines what is personal data — and specifically runs contrary to the decision in the Digital Rights Ireland case and a whole string of subsequent cases confirming that metadata can be, and frequently is, personal data — and sometimes sensitive personal data, because it can reveal relationships [e.g. trade union membership, legal counsel, a journalist’s sources etc].”

McGarr asserts that Zoom does need consent for this type of processing to be lawful in the EU — both for metadata and customer content data used to train AI models — and that it can’t actually rely on performance of a contract for what is obviously non-essential processing.

But it also needs consent to be opt in, not opt out. So, basically, no pre-checked boxes that only an admin can uncheck, and with nothing but a vague “notice” sent to other users that essentially forces them to consent after the fact or quit; which is not a free and unbundled choice under EU law.

“It’s a US kind of approach,” he adds of Zoom’s modus operandi. “It’s the notice approach — where you tell people things, and then you say, well, I gave them notice of X. But, you know, that isn’t how EU law works.”

Add to that, processing sensitive personal data — which Zoom is likely to be doing, even vis-a-vis “service generated data” — requires an even higher bar of explicit consent. Yet — from an EU law perspective — all the company has offered so far in response to the T&Cs controversy is obfuscation and irrelevant excuses.

Pressed for a response on legal basis, and asked directly if it’s relying on performance of a contract for the processing, a Zoom spokesman declined to provide us with an answer — saying only: “We’ve logged your questions and will let you know if we get anything else to share.”

The company’s spokesman also did not respond to questions asking it to clarify how it defines customer “inputs” for the data-sharing choice that (only) admins get — so it’s still not entirely clear whether “inputs” refers exclusively to customer comms content. But that does appear to be the implication from the bolded claim in its contract not to use “audio, video or chat Customer Content to train our artificial intelligence models without your consent” (note, there’s no bolded mention of Zoom not using customer metadata for AI model training).

If Zoom is excluding “service generated data” (aka metadata) from even its opt out consent it seems to believe it can help itself to these signals without applying even this legally meaningless theatre of consent. Yet, as McGarr points out, “service generated data” doesn’t get a carve out from EU law; it can be, and often is, classed as personal data. So, actually, Zoom does need consent (i.e. opt in, informed, specific and freely given consent) to process users’ metadata too.

And let’s not forget ePrivacy has fewer available legal bases than the GDPR — and explicitly requires consent for interception. Hence legal experts’ conviction that Zoom can only rely on (opt in) consent as its legal basis to use people’s data for training AIs.

A recent intervention by the Italian data protection authority on OpenAI’s generative AI chatbot service, ChatGPT, appears to have arrived at a similar view on use of data for AI model training — since the authority stipulated that OpenAI can’t rely on performance of a contract to process personal data for that. It said the AI giant would have to choose between consent or legitimate interests for processing people’s data for training models. OpenAI later resumed service in Italy having switched to a claim of legitimate interests — which requires it to offer users a way to opt out of the processing (which it had added).

For AI chatbots, the legal basis for model training question remains under investigation by EU regulators.

But, in Zoom’s case, the key difference is that for comms services it’s not just GDPR but ePrivacy that applies — and the latter doesn’t allow legitimate interests (LI) to be used for tracking.

Zooming to catch up

Given the relative novelty of generative AI services, not to mention the huge hype around data-driven automation features, Zoom may be hoping its own data-mining for AI will fly quietly under international regulators’ radar. Or it may just be focused elsewhere.

There’s no doubt the company is feeling under pressure competitively — after what had, in recent years, been surging global demand for virtual meetings fell off a cliff once we passed the peak of COVID-19 and rushed back to in-person handshakes.

Add to that the rise of generative AI giants like OpenAI is clearly dialling up competition for productivity tools by massively scaling access to new layers of AI capabilities. And Zoom has only relatively recently made its own play to join the generative AI race, announcing it would dial up investment back in February — after posting its first fourth quarter net loss since 2018 (and shortly after announcing a 15% headcount reduction).

There’s also already no shortage of competition for videoconferencing — with tech giants like Google and Microsoft offering their own comms tool suites with videochatting baked in. Plus even more rivalry is accelerating down the pipes as startups tap up generative AI APIs to layer extra features on vanilla tools like videoconferencing — which is driving further commodification of the core platform component.

All of which is to say that Zoom is likely feeling the heat. And probably in a greater rush to train up its own AI models so it can race to compete than it is to send its expanded data sharing T&Cs for international legal review.

European privacy regulators also don’t necessarily move that quickly in response to emerging techs. So Zoom may feel it can take the risk.

However there’s a regulatory curve ball in that Zoom does not appear to be main established in any EU Member State.

It does have a local EMEA office in the Netherlands — but the Dutch DPA told us it is not the lead supervisory authority for Zoom. Nor does the Irish DPA appear to be (despite Zoom claiming a Dublin-based Article 27 representative).

“As far as we are aware, Zoom does not have a lead supervisory authority in the European Economic Area,” a spokesman for the Dutch DPA told TechCrunch. “According to their privacy statement the controller is Zoom Video Communications, Inc, which is based in the United States. Although Zoom does have an office in the Netherlands, it seems that the office does not have decision-making authority and therefore the Dutch DPA is not lead supervisory authority.”

If that’s correct, and decision-making in relation to EU users’ data takes place exclusively over the pond (inside Zoom’s US entity), any data protection authority in the EU is potentially competent to interrogate its compliance with the GDPR — rather than local complaints and concerns having to be routed through a single lead authority. Which maximizes the regulatory risk since any EU DPA could make an intervention if it believes user data is being put at risk.

Add to that, ePrivacy does not contain a one-stop-shop mechanism to streamline regulatory oversight as the GDPR does — so it’s already the case that any authority could probe Zoom’s compliance with that directive.

The GDPR allows for fines that can reach up to 4% of global annual turnover. Whereas ePrivacy lets authorities set appropriately dissuasive fines (which in the French CNIL’s case has led to several hefty multi-million dollar penalties on a number of tech giants in relation to cookie tracking infringements in recent years).

So a public backlash by users angry at sweeping data-for-AI T&Cs may cause Zoom more of a headache than it thinks.

*NB: The quality of the graphic on Zoom’s blog was poor with text appearing substantially pixellated, making it hard to pick out the words without cross-checking them elsewhere (which we did)